Guide: Video Encoding, Part 2 - Deep Dive

Every year, manufacturers in the consumer electronics space try to outdo each other, all promising to take our videos to the next level. For the most part, they deliver on these promises, and their headline features tend to mirror each other:

More resolution

More dynamic range

Wider color gamut, more colors

More frame-rate options and more extensive slow-motion

Better and more immersive audio

Log or raw video recording

Gimmicky features like 360° or 3D video

All of this is incredible, but it comes at the cost of ever-larger file sizes. Of course, we expect that as we take more videos, we’ll need more space to store all the footage, but there are things we can do to mitigate the storage cost of our media.

I wrote this guide because nearly everything else I found was focused on streaming or recording footage, not on what to do with footage that already exists when the goal is long-term archival.


Planning

As I talked about in the previous post, every codec is a compromise between compression, quality, speed and ease of playback. Every scenario is different, but since we have to start somewhere, I’ll describe my scenario and walk through the process I took.

At a high level, I’m looking for a way to reduce the storage requirements for my content without taking a substantial hit to image quality.

Any lossy encoding or transcoding will result in some image quality loss, but if I can keep the output image quality within a [subjective] margin of the input video, that is acceptable. I will, of course, consider trade-offs that strongly favor either image quality (e.g., a 1% bigger file for 30% better image quality) or compression (e.g., 1% worse image quality for a 30% smaller file).

My scenario

My content is generally high-quality

Currently, mostly encoded with high-bitrate H264; moving forward, more content will be captured with H265

Currently, mostly 1080P; moving forward, there will be a greater focus on higher resolutions

Currently, almost all of my content is 8-bit; moving forward, there will be more 10-bit content

I have a substantial workload

At my starting point, there was something in the range of 15,000 files to work through

However, there is enough breathing room on storage that this can be a multi-year project if needed

Increasing the available storage capacity is not an option

Reasonably performant hardware (for the time)

Image quality is important: I accept the initial one-time hit that comes with any transcode, but it must pass the “eyeball test”

Use cases

Encoded content must be accessible in the current timeframe

Content is entirely for my own consumption, so I don’t need to worry about whether others have decode acceleration; on the off chance I upload to something like YouTube, their servers will re-encode the video anyway so that the masses can watch it

Candidate Codecs

H264 (CPU/GPU)

Since my current backlog is mostly H264, I won’t see any meaningful compression gains going with H264

Transcoding H265 content to H264 may be something that some people need to consider if their playback devices don’t have hardware acceleration to decode H265

H265 (CPU/GPU)

H265 promises up to 50% compression advantage at ‘the same’ image quality so this is a no-brainer to at least consider

Even when re-encoding my existing H265 content at lower settings, I can leverage the advanced nature of the codec to go after large compression gains for relatively small quality losses

H265 encoding is noticeably slower than H264

Decode acceleration is generally widely available for H265

AV1 (CPU only)

AV1 promises up to 67% compression advantage versus H264 at ‘the same’ image quality

I’ve heard that AV1 can be quite a bit slower than even H265 encoding.

As of Q1-2021, there isn’t much in the way of decode acceleration for AV1 — only the latest generation of graphics cards have acceleration for AV1. If the compression levels are substantial enough, I may be willing to overlook this (since most of my playback devices have enough horsepower to decode AV1 without the need for dedicated acceleration)

For my specific use case, the frontrunner will be H265 and it’s just a matter of doing some tests to see what settings make sense and whether using the GPU to do the heavy lifting is a viable option.

Testing Scenario

The ultimate goal is to identify a combination of encoder, codec and settings that substantially compresses my videos without too much of a hit to image quality and without taking forever. In an ideal world, we would have infinite computing power and could just check all of the possible permutations… Back in reality, using a representative clip to test as many configurations as you find feasible is a good stand-in. In my case, to get an ‘apples-to-apples’ comparison, I started with a video trailer:

Available in all of the common resolutions: 480, 720, 1080, 2160

Nearly identical clip across all of the resolutions (3504-3660 frames) showing the same content

All of the clips were 8-bit, high-bitrate H264/AVC which is representative of the bulk of my content

I ran through a wide selection of transcode scenarios. I copied (remuxed) the audio stream as-is (i.e., ‘no processing’) to isolate the test to just the video workload (audio encoding is generally very fast anyway). For each clip:

GPU — NVENC: H264 and H265 using 6 quality settings each

CPU — H264, H265 and AV1 using 10-30 quality settings each

The end goal is to objectively identify three properties:

Compression performance: expressed as the percentage by which the output file is smaller than the original source. In some cases the output file can end up bigger than the input file, so that’s something to watch out for

Encode performance: expressed as average frames per second. Each frame takes a different amount of time to encode, but this gives us a starting point. Generally speaking, 24 fps is real-time (since most films are shot at that frame rate): if I can encode a 24 fps clip at 24 fps, a 30-minute clip will take about 30 minutes to encode.

Calculated image quality: expressed as an overall average VMAF score, this attempts to quantify the perceived image quality of the entire clip. I actually collected PSNR, SSIM and VMAF data for each frame, in each clip, in case I want to dive deeper.
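The three metrics above come down to simple arithmetic. The sketch below shows one way to compute them. It is not the tooling used for this post (that was FFMetrics plus a few parsing utilities of my own), and the function names are hypothetical. It also assumes the per-frame VMAF scores come from a libvmaf JSON log in the libvmaf 2.x layout, with a top-level `frames` list where each entry holds `metrics.vmaf`. If your tool writes a different format, you would need to adapt the parser.

```python
import json
from statistics import mean


def compression_percent(source_bytes: int, output_bytes: int) -> float:
    """Percentage by which the output is smaller than the source.

    A negative result means the transcode produced a *bigger* file.
    """
    if source_bytes <= 0:
        raise ValueError("source size must be positive")
    return (1 - output_bytes / source_bytes) * 100


def encode_minutes(clip_minutes: float, clip_fps: float, encode_fps: float) -> float:
    """Estimated wall-clock encode time: total frames / average encode speed."""
    total_frames = clip_minutes * 60 * clip_fps
    return total_frames / encode_fps / 60


def mean_vmaf(log_text: str) -> float:
    """Average the per-frame VMAF scores in a libvmaf JSON log.

    Assumed layout (libvmaf 2.x):
    {"frames": [{"frameNum": 0, "metrics": {"vmaf": 93.2, ...}}, ...]}
    """
    frames = json.loads(log_text)["frames"]
    if not frames:
        raise ValueError("log contains no frames")
    return mean(frame["metrics"]["vmaf"] for frame in frames)
```

For example, `encode_minutes(30, 24, 24)` returns 30.0, which matches the real-time rule of thumb. If you pass `compression_percent` two file sizes from `os.path.getsize`, it returns a negative number whenever the output grew.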

In short, I want to compress my footage without too much of a hit to image quality and without taking 10x real-time, specifically with long-term archival in mind (rather than streaming or uploading to YouTube, etc.).

Limitations

I use the same machine for all of the tests (see here for Q1-2021); unfortunately my current configuration does not give me access to AMF or QuickSync GPU encoding without fundamentally changing the machine. I suspect that:

NVENC is the most performant of the three major GPU options (it’s certainly the best-supported)

General conclusions I arrive at with NVENC will apply to AMF and QuickSync

I may revisit AMF/QuickSync (using fundamentally different hardware) as an addendum.

A word about Image Quality

I used the VMAF 4K model when analyzing the encoded output files. Normally the 4K model is reserved for 4K content (duh), but with 4K (or higher-resolution) displays becoming more and more prevalent, it made more sense to use the 4K model to compare all the results. After all, if you happen to be watching 1080P content, you’re not going to put your 4K TV away and go grab a 1080P display to watch it!

Data Collection

GPU Encoding - NVENC (H264 & H265)

NVENC is the hardware encoder included with NVIDIA graphics cards. Exactly which codecs and features NVENC supports varies with the specific generation of hardware you have: you can find the list of supported codecs for encode and decode acceleration here.

Generally speaking, since our starting point is already H264, there’s no reason to opt for H264 if you have access to H265. There are two notable exceptions that apply to older/weaker playback devices:

If you need to resize (usually, downscale) a video so that the weaker device can play it or,

If your starting point is a more advanced codec like H265 or AV1 and the playback device struggles to decode it

In both of these cases, I’d suggest that compression and, to a degree, image quality don’t matter, since you’re likely transcoding just so you can play the video ‘just this once’. Over time, as your playback device gets more powerful, you’ll be able to easily play the original video clip.

Data Deep Dive

With GPU encoding, there is always the risk that your output file ends up larger than your input file. That is to be expected if you’re transcoding from a more complex codec to a simpler one, but I never do that in this data set. You are more likely to see a larger output at lower resolutions, but if you crank the quality setting up, it can happen even at higher resolutions. It’s important to consider your priorities (compression, image quality, speed etc.) at the specific resolution of your content.

The following data deep dive is a collation of 48 encode runs capturing the following permutations:

4 resolutions (480, 720, 1080, 2160)

2 codecs (H264, H265)

6 quality settings: 15, 18, 21, 23, 27, 30 (note: the lower the number, the higher the quality)
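The 48 runs are simply the full cross product of those three lists (4 × 2 × 6 = 48). Here is a minimal sketch of that test matrix. The variable names are hypothetical, and launching the actual NVENC jobs (StaxRip, in my case) is outside its scope.

```python
from itertools import product

RESOLUTIONS = [480, 720, 1080, 2160]
GPU_CODECS = ["H264", "H265"]            # both encoded with NVENC
GPU_QUALITY = [15, 18, 21, 23, 27, 30]   # lower number = higher quality

# One (resolution, codec, quality) tuple per encode run.
gpu_runs = list(product(RESOLUTIONS, GPU_CODECS, GPU_QUALITY))

print(len(gpu_runs), "GPU encode runs")
```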

Conclusions

If you use NVENC for encoding, it generally makes the most sense to use H265 at the higher-quality end of the quality settings (i.e., the lower numbers). There’s effectively no performance hit (system or encode), and H265 lets you push the quality a bit higher while still maintaining compression.

If you are streaming or doing anything of that nature, a GPU encode is almost a given, just so that you don’t take the hit to system performance.

GPU encoding is a viable option if you need a predictable encode time. For example, if you are compressing security camera footage to reduce the time it takes to upload it offsite, you don’t want the time saved by uploading smaller files to be lost to a lengthy encode!

If you have a relatively weak PC in general, GPU encoding may be the only feasible option

CPU Encoding - H264, H265 and AV1

When encoding with the CPU, you can pick from almost any codec; the only options unavailable are the GPU-specific hardware encoders. For my scenario, I was considering H264, H265 and AV1. H264, sometimes referred to as AVC, is a fairly mature codec now, which means a ton of devices ship with decode and encode acceleration for it. As of 2021, H264 is the default codec for the majority of phones, cameras, drones, dash-cams, doorbell-cams etc. H264 offers a great blend of compression, performance and quality even as ‘next generation’ codecs like H265 and AV1 make their way onto the stage. These more advanced codecs promise (optimistically) files that are 50% and 66% smaller than H264 at the same image quality, at the cost of computational complexity.

In my case, the majority of my content is already encoded using H264, so at first glance it may not make sense to consider H264 as an option. However, there are different ways to encode with a codec, and the data deep dive will show that we can still extract quite a bit of compression by changing a few things.

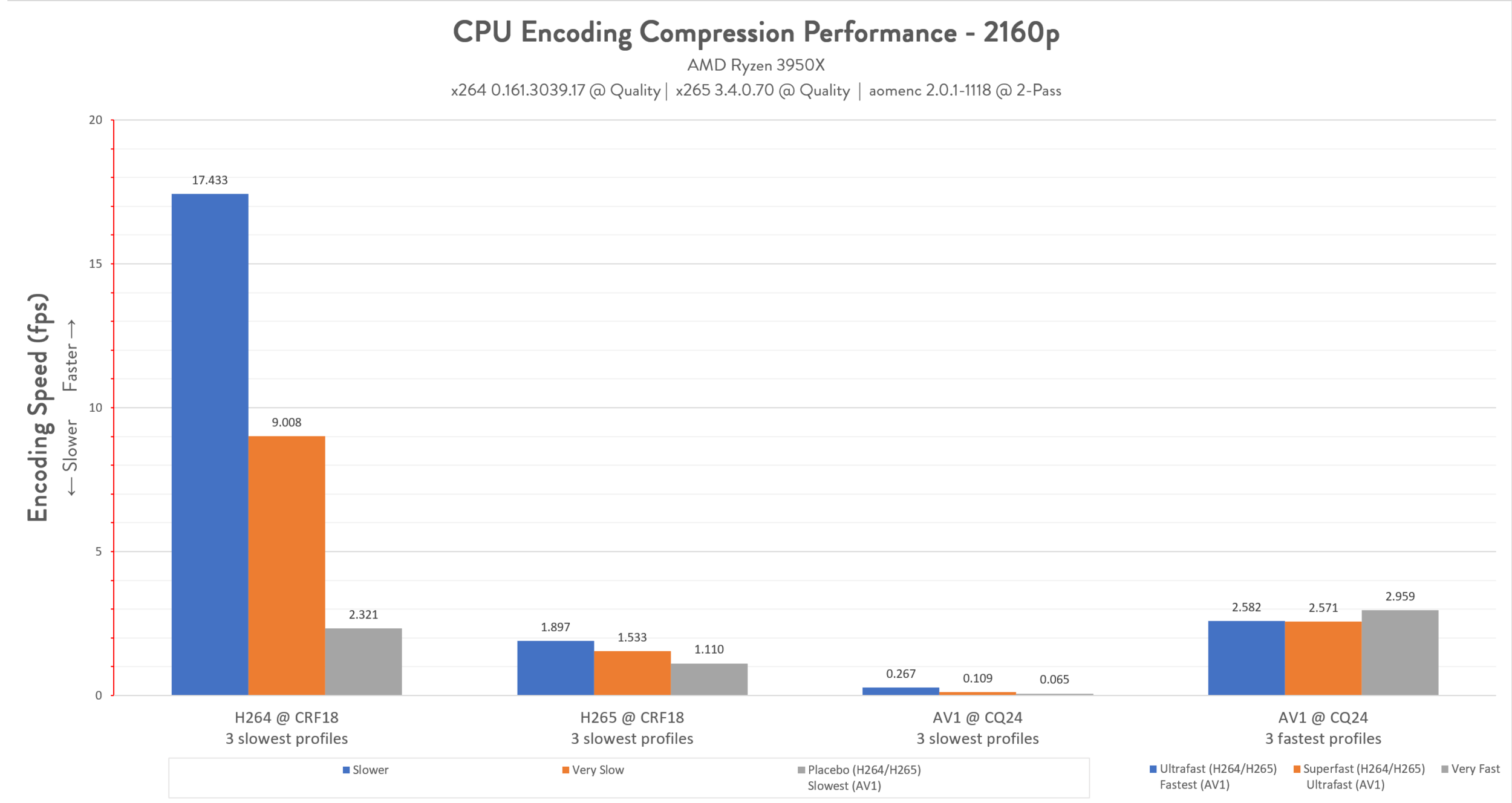

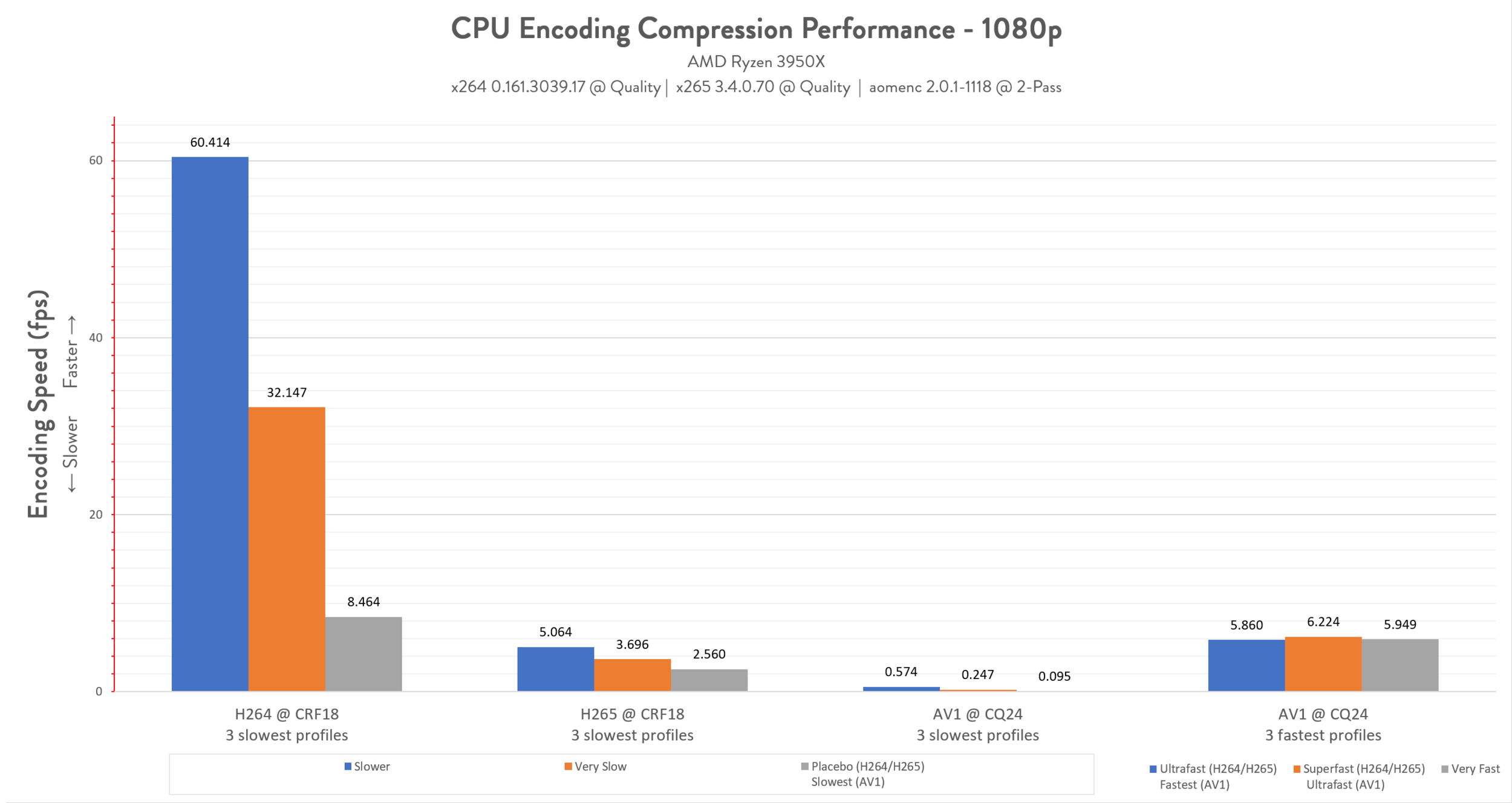

CPU encoding brings an overwhelming number of configuration options. Just like the GPU encoding process, there is a ‘quality index’ value (where lower values yield better quality), but there are also presets (Fast, Medium, Slow, etc.) which toggle a multitude of options at once. Generally speaking, these options tweak how much time the encoder spends analyzing a scene, the idea being that the more time the CPU spends, the better the image quality. There is also a fairly loose correlation with compression (longer analysis time = better compression). This first data set collates the metrics (compression & speed) by codec and the general ‘quality index’.

Data Deep Dive

With CPU encoding, you have an overwhelming number of options to configure. While it’s possible to select a configuration where the output file ends up bigger than the source file, that’s much less likely to happen because the process is much more efficient overall. As before, it makes the most sense to look at the metrics (compression, speed, quality) with a specific target resolution in mind.

The following data deep dive is a collection of 280 encode runs capturing the following permutations:

4 resolutions (480, 720, 1080, 2160)

3 codecs (H264, H265, AV1)

3 quality levels (18, 22, 26) for H264/H265 and only 1 quality level (24) for AV1 (note: the lower the number, the higher the quality)

10 quality sublevels (Ultrafast, Super Fast, Very Fast, etc.)
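The 280 figure only works out if all 10 sublevels apply to every codec/quality combination: 4 resolutions × (2 codecs × 3 quality levels + 1 AV1 quality level) × 10 sublevels = 4 × 7 × 10 = 280. The sketch below reconstructs that matrix under that assumption. It uses the standard x264/x265 preset names for H264/H265. The post doesn’t name the AV1 sublevels, so they are numbered here as hypothetical placeholders.

```python
from itertools import product

RESOLUTIONS = [480, 720, 1080, 2160]

# Standard x264/x265 presets, fastest to slowest (10 in total).
PRESETS = ["ultrafast", "superfast", "veryfast", "faster", "fast",
           "medium", "slow", "slower", "veryslow", "placebo"]

# Hypothetical placeholders: the real AV1 sublevel names aren't given.
AV1_SUBLEVELS = [f"av1-level-{i}" for i in range(10)]

# H264/H265: 4 resolutions x 2 codecs x 3 CRF values x 10 presets = 240 runs.
x26x_runs = list(product(RESOLUTIONS, ["H264", "H265"], [18, 22, 26], PRESETS))

# AV1: 4 resolutions x 1 quality level (24) x 10 sublevels = 40 runs.
av1_runs = list(product(RESOLUTIONS, ["AV1"], [24], AV1_SUBLEVELS))

cpu_runs = x26x_runs + av1_runs
print(len(cpu_runs), "CPU encode runs")
```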

Conclusions

AV1 is a performance hot mess. I was originally going to run three different quality-level passes (as with H264/H265), but AV1 just doesn’t scale to the number of available cores. I did try some other AV1 encoders (both rav1e and SVT-AV1), but I ran into some fussy issues with my workflow. As I cranked the quality dial on AV1, it became more appropriate to measure performance in frames per minute (!!).

If you have the horsepower and the time to encode, CPU is generally a no-brainer: you get quality and compression. In particular, H265 @ Medium is likely the sweet spot for many people. At 1080P, for example, you get approximately real-time encoding with very good compression.

The maturity of the H264 codec is commendable; again, H264 @ Medium (or Slow) might be a great sweet spot for a lot of people if you don’t have as much horsepower to power through H265

H264 is so performant at lower quality settings that it may be a reasonable stand-in for GPU encoding. If you can take the overall system performance hit (and likely a quality hit), you can certainly get speeds greatly exceeding GPU encoding.

All of this performance is extremely sensitive to the hardware at your disposal. H264 and H265 both scale exceptionally well with the number of available threads.

TL;DR: What did I end up going with?

I do suggest that each user come up with their own criteria for success based on their overall objectives, and use representative sample videos for some initial testing. Even so, a recommendation can be a good starting point. At the time of writing, I’ve done about 3,000 encodes.

For almost all of my encode jobs, I use CPU encoding with H265 @ CRF22 with the Slow preset

Example (x265 options):

```
--crf 22 --preset slow --profile main --rskip 0 --refine-mv 3 --hevc-aq --aq-motion --hist-threshold 0.01 --pmode --pme --colorprim bt2020 --colormatrix bt2020nc --transfer smpte2084 --range limited --opt-cu-delta-qp --rect --high-tier
```

I use the Matroska file container for all of my content

I don’t transcode the audio streams; I just copy/mux the existing streams into the output. This reduces the amount of verification I have to do and simplifies my workflow: I don’t need to worry about changing the number of channels, bitrates etc.

I do make some minor tweaks to my baseline flow for 10-bit or animated (cartoon) content.

For real-world actual encode jobs, I generally see 50-90% compression with the overwhelming bulk being between 80-85% compression (typically, low resolution & low quality content doesn’t compress well).
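I drive x265 through StaxRip, but if you prefer a scriptable route, the sketch below covers the core of the same baseline using ffmpeg’s libx265 encoder instead. That means CRF 22, the Slow preset, audio copied untouched and Matroska output. This is a swapped-in tool, not my actual workflow, and the helper names are hypothetical. Only `-crf` and `-preset` are mapped. The remaining x265 options from the example would have to be passed through ffmpeg’s `-x265-params` option, which this sketch does not attempt. Also note that ffmpeg’s default stream selection keeps one video and one audio stream, so subtitles or extra audio tracks would need explicit `-map` options.

```python
import subprocess
from pathlib import Path


def build_hevc_cmd(src: Path, dst: Path, crf: int = 22, preset: str = "slow") -> list[str]:
    """ffmpeg argument list: re-encode video with libx265, copy audio, write MKV."""
    if dst.suffix.lower() != ".mkv":
        raise ValueError("expected a Matroska (.mkv) output path")
    return [
        "ffmpeg", "-hide_banner",
        "-i", str(src),
        "-c:v", "libx265",
        "-crf", str(crf),
        "-preset", preset,
        "-c:a", "copy",   # mux the existing audio stream untouched
        str(dst),
    ]


def transcode(src: Path, dst: Path) -> None:
    """Run the encode; needs an ffmpeg build with libx265 on PATH."""
    subprocess.run(build_hevc_cmd(src, dst), check=True)
```

Building the argument list separately from running it makes it easy to log or dry-run the exact command before committing to a long encode.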

Closing thoughts

Over the years I’ve made a few attempts at building a data-set like this but I’ve always given up well before completing because it just took so long and I didn’t have a compelling reason to continue on with the painful process of collecting and collating all of the data. A few things have changed over the years:

I’ve got a lot more computing horsepower now (relative to the workload)

Getting good sample videos across all of the resolutions is easier with movie trailers, which:

Come in a variety of resolutions,

Are generally short,

Can be found (for each resolution) with the same starting codec,

Generally feature a variety of scenes (action, lighting etc.)

I have motivation now: phones and cameras continue to produce bigger and better footage, and my available disk space isn’t really growing that fast, so I need to make the most of it

I set out to find a codec/configuration for long-term footage archival, so: ‘encode once, store forever’. Any time-cost spent transcoding footage would have forever-gains in reduced disk footprint. This gives me a bit more tolerance for slower codecs and settings that might optimize for compression and/or image quality.

This project had two really big eye-openers: the performance of H264 and AV1. H264 stood out for the ludicrously high performance of its software encoders, and AV1 for its ridiculously slow performance in every scenario. I suppose the performance gains for H264 make sense: it’s been almost 10 years since I last explored this video compression stuff, and both the codec and the hardware have come a long way since then.

AV1 performance, though: it starts bad and gets worse as you climb the image quality ladder. Here are the actual times for encoding a 2min26sec clip (3660 frames @ 4K) with each codec, using the highest-quality settings for each:

NVENC H265 @ CQP15: 93 seconds (1 min 33 sec)

NVENC H264 @ CQP15: 99 seconds (1 min 39 sec)

AV1 @ CQ24 + Fastest: 1357 seconds (22 min 37 sec)

CPU H264 @ CRF18 + Placebo: 1510 seconds (25 min, 10 sec)

CPU H265 @ CRF18 + Placebo: 3158 seconds (52 min, 38 sec)

AV1 @ CQ24 + Slowest: 54124 seconds (15 hours, 2 min, 4 sec)
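To put those wall-clock times on the same footing as the frames-per-second metric used earlier, divide the 3660 frames by each encode time. The sketch below uses only the numbers listed above and treats the clip as exactly 146 seconds long. It works out to roughly 39 fps for NVENC H265. The slowest AV1 run comes to about 4 frames per minute, which is roughly 370x slower than real time.

```python
FRAMES = 3660
CLIP_SECONDS = 2 * 60 + 26  # 2 min 26 sec

# Encode times in seconds, as listed above.
ENCODE_SECONDS = {
    "NVENC H265 @ CQP15": 93,
    "NVENC H264 @ CQP15": 99,
    "AV1 @ CQ24 + Fastest": 1357,
    "CPU H264 @ CRF18 + Placebo": 1510,
    "CPU H265 @ CRF18 + Placebo": 3158,
    "AV1 @ CQ24 + Slowest": 54124,
}


def throughput(frames: int = FRAMES, clip_s: int = CLIP_SECONDS) -> dict:
    """Map each run to (average fps, multiple of real time; >1 = slower)."""
    return {name: (frames / secs, secs / clip_s)
            for name, secs in ENCODE_SECONDS.items()}


for name, (fps, x_realtime) in throughput().items():
    print(f"{name:28} {fps:8.2f} fps {x_realtime:8.1f}x real-time")
```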

Yes. It took me over 15 hours to encode a 2min26sec clip. Even at its fastest setting (lowest image quality), AV1 only slightly edges out H264 at maximum quality (1357 vs. 1510 seconds).

I don’t have enough exposure to AV1 to dig into this further (and certainly don’t have the willpower or motivation to learn more), but at first glance there was almost no thread scaling. H264 and H265 both saturated my processor at 100% load, but AV1 spent the entire time at a paltry 20% load. Even with an overly optimistic 5x speedup (i.e., perfect scaling from 20% to 100% load), the AV1 encode would have taken about 3 hours, still more than 3x slower than H265.
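Here is that back-of-the-envelope estimate written out. It assumes encode time scales perfectly with CPU load, so going from 20% to 100% load gives a 5x speedup. Real encoders rarely achieve this, so treat the result as a best case rather than a prediction. It comes out at roughly 3 hours, or about 3.4x the H265 Placebo time.

```python
AV1_SLOWEST_S = 54124   # measured: AV1 @ CQ24 + Slowest, ~20% CPU load
H265_PLACEBO_S = 3158   # measured: CPU H265 @ CRF18 + Placebo, ~100% load
OBSERVED_LOAD = 0.20    # fraction of the CPU that AV1 actually used

# Best case: perfect scaling to full load is a 1 / 0.20 = 5x speedup.
ideal_av1_s = AV1_SLOWEST_S * OBSERVED_LOAD
hours = ideal_av1_s / 3600
vs_h265 = ideal_av1_s / H265_PLACEBO_S

print(f"ideal AV1: {hours:.1f} h, {vs_h265:.1f}x the H265 Placebo time")
```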

If we take a closer look at the AV1 performance, specifically for higher resolutions (there’s no reason to consider a codec like AV1 for low-resolution content), we can see just how poor the performance is. Even the absolute fastest AV1 barely beats the absolute slowest H265!

FAQ

What encoding software do you use? I use StaxRip.

How did you calculate the quality scores (VMAF)? You can use ffmpeg to calculate the scores directly, but I used a tool called FFMetrics in conjunction with a couple of quick parsing utilities I threw together.
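If you’d rather skip FFMetrics, ffmpeg’s `libvmaf` filter can write the per-frame scores directly. The minimal sketch below builds that command, assuming an ffmpeg build compiled with libvmaf. The first input is the encode under test and the second is the reference, and the two should be frame-aligned and at the same resolution. The option for selecting the 4K model has changed between ffmpeg/libvmaf versions, so it is deliberately left out here, which means the filter falls back to its default model. `vmaf_cmd` is a hypothetical helper name. Also, a log path containing a colon (such as a Windows drive letter) would need escaping inside the filter string.

```python
import subprocess


def vmaf_cmd(distorted: str, reference: str, log_path: str = "vmaf.json") -> list[str]:
    """ffmpeg argument list that scores `distorted` against `reference`
    with the libvmaf filter and writes per-frame scores as a JSON log."""
    lavfi = f"[0:v][1:v]libvmaf=log_fmt=json:log_path={log_path}"
    return [
        "ffmpeg", "-hide_banner",
        "-i", distorted,    # first input: the encode being scored
        "-i", reference,    # second input: the original source
        "-lavfi", lavfi,
        "-f", "null", "-",  # discard decoded frames; only the log matters
    ]


def run_vmaf(distorted: str, reference: str) -> None:
    subprocess.run(vmaf_cmd(distorted, reference), check=True)
```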

What about Handbrake? Handbrake is great: it’s free, super friendly and easy to use. In my personal experience, I’ve found that I don’t get full CPU saturation out of it (i.e. it runs slower). I’ve heard some rumblings about Handbrake being thread-limited — I don’t know if there is any truth to it, but since I have a working solution, I haven’t had the need to look into this further.

What hardware do you run? At the time of this post, see these specs (Fury: Q1-2021)

What about AMF? Or Quick Sync? At the time of writing, I don’t have a modern AMD graphics card (one with a hardware H265 encoder), so I can’t test that (although I’ve heard that the encoding experience isn’t so hot). Changing the CPU to an Intel one (to get Quick Sync) would fundamentally change the characteristics of this machine, so the metrics would be even less comparable.

What about codec XYZ? At this time, I don’t have a use for codecs outside of the candidates selected for this test. In the longer term, I may look at H266 (VVC) or AV2, both of which promise generational improvements over H265.

Will I revisit AV1? Unlikely, unless spot testing shows monumental performance gains.

Are there any downsides to choosing H265? If your target playback device is a modern computer or phone, you should be fine; if your device is a cheap media box, then you may need to look at the specifications to see if the chip has decode acceleration (and in some cases, you’ll need to look further to check for resolution and color compatibility). Keep in mind that over time, more and more devices will come with H265 decode acceleration as well.

Why Matroska and not MP4? Matroska is an open container standard that works pretty well; if your player/device can’t support it, get a better player/device. On a [more] serious note, MP4 is probably fine; I just dislike it as a personal preference.

What about 10-bit (or beyond) video? Unfortunately, I wasn’t able to find a set of trailers that spanned both resolution and bit depth, and I didn’t want to compare trailers for different movies (since different scenes may compress better or worse). In general, 10-bit video requires setting --profile main10 --output-depth 10 (--profile main12 --output-depth 12 for 12-bit, and so on), and the encode tasks hit a bit harder. I imagine that most of the same patterns covered by the data in this post should apply.
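Here is a tiny sketch for picking those flags by bit depth. `depth_flags` is a hypothetical name, and the helper only covers x265’s Main-family profiles. Producing 10- or 12-bit output also requires an x265 build with high-bit-depth support (for example, a multilib build).

```python
# x265 Main-family profile name for each output bit depth.
PROFILE_BY_DEPTH = {8: "main", 10: "main10", 12: "main12"}


def depth_flags(bit_depth: int) -> list[str]:
    """x265 CLI flags selecting the profile and output depth."""
    try:
        profile = PROFILE_BY_DEPTH[bit_depth]
    except KeyError:
        raise ValueError(f"unsupported bit depth: {bit_depth}") from None
    return ["--profile", profile, "--output-depth", str(bit_depth)]
```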

Related

If this post went a bit too deep (but you’re still interested in video encoding), check out the ‘starter guide’ which breaks down the handful of terms you need to know and how all the pieces fit together.